Why I Love Human (Primate) Vision

My love for all things brain really began in the classroom of Dr. Victor Johnston at New Mexico State University. His undergraduate honors class -- The Human Mind -- changed me like no other. Perhaps I can explain the effect this class had on me by telling you that I didn't have to leave my chair to put my hands on the notes from that class ... that I took in Spring semester 1995.

According to my notes, my first real introduction to human vision came on March 28, 1995. In these notes, I have written things such as "Dominant sensory is visual. Sensitive to electromagnetic radiation. Our brain separates these energies and magnifies the difference." Simply put, if you look at the entire spectrum of electromagnetic radiation, the difference between red and violet is infinitesimal. Yet we see all the difference in the world.
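To put a rough number on "infinitesimal," here is a small Python sketch. The band edges are assumptions, not something from my notes: visible light is taken as roughly 380 to 750 nm, and the "entire spectrum" is arbitrarily bounded from 1 picometre (gamma rays) to 1 kilometre (long radio waves). Counting in octaves (doublings of wavelength) is one illustrative way to compare the ranges.

```python
import math

# Assumed bounds (illustrative only): visible band ~380-750 nm, and an
# arbitrary "whole spectrum" from 1 pm (gamma rays) to 1 km (radio).
VIOLET_M = 380e-9
RED_M = 750e-9
SPECTRUM_MIN_M = 1e-12
SPECTRUM_MAX_M = 1e3

def octaves(short_m, long_m):
    """Number of doublings of wavelength between two bounds."""
    return math.log2(long_m / short_m)

visible_octaves = octaves(VIOLET_M, RED_M)
total_octaves = octaves(SPECTRUM_MIN_M, SPECTRUM_MAX_M)
# Share of the wavelength range on a plain linear scale.
linear_fraction = (RED_M - VIOLET_M) / (SPECTRUM_MAX_M - SPECTRUM_MIN_M)

print(f"visible band: {visible_octaves:.2f} octaves out of {total_octaves:.1f}")
print(f"linear share of the wavelength range: {linear_fraction:.1e}")
```

With these bounds, everything from violet to red fits in less than one octave out of roughly 50, and on a linear scale it is a vanishingly small sliver of the range.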

This electromagnetic radiation falls on the rods and cones of the retina. The rods and cones feed (by way of bipolar cells) into retinal ganglion cells, whose axons carry the signal back toward the lateral geniculate nucleus (LGN) of the thalamus (among other places). The thalamus is "the gateway to the cortex," so most senses (but not smell) must pass through the thalamus to reach the neocortex. In the case of vision, the LGN projects to the very back of the brain, to a part of the cortex often called V1, also known as primary visual cortex, or Brodmann area 17. Reach around and put your hand on the back of your head. This is where conscious vision begins.

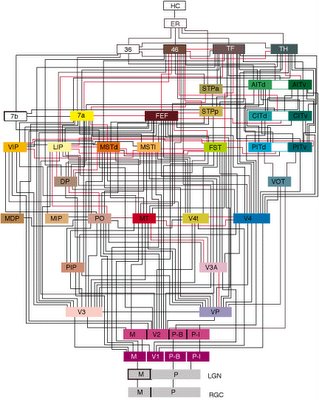

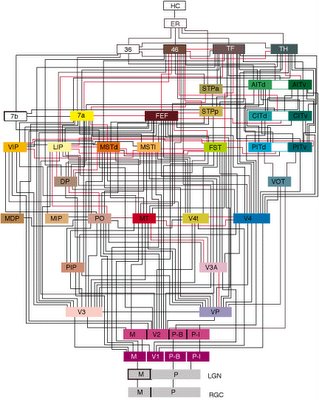

What fascinates me is both the function and the efficiency of the primate visual system. Retinal ganglion cells are largely characterized by center-surround receptive fields. So these cells do not see "things." They see dark spots surrounded by light rings, and vice versa. Moving back toward V1, we start to see cells whose receptive fields respond to bars of light in a particular orientation. To your early visual cortex, the visual world is nothing more than little bars of light at every angle. It gets more complicated from here, and somehow it all comes together as the conscious picture in your head. To get an idea of the complexity, see the so-called Van Essen diagram above.

This wiring diagram of primate vision begins at the bottom with retinal ganglion cells and works its way forward to the hippocampus in the medial temporal lobe.
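The two receptive-field types described a couple of paragraphs up can be sketched with two standard textbook models: a difference of Gaussians (DoG) for a center-surround ganglion cell and a Gabor filter for an orientation-tuned cell near V1. These are common modeling choices, not anything from my class notes; the patch size, sigmas, and wavelength are illustrative assumptions, and real neurons are far from purely linear.

```python
import math

# Illustrative models only; SIZE, sigmas, and wavelength are assumptions.
SIZE = 15  # each receptive field covers a SIZE x SIZE patch of "retina"
HALF = SIZE // 2
COORDS = range(-HALF, HALF + 1)

def gaussian(r2, sigma):
    """Normalized 2D Gaussian evaluated at squared distance r2."""
    return math.exp(-r2 / (2 * sigma**2)) / (2 * math.pi * sigma**2)

def dog_kernel(sigma_center=1.0, sigma_surround=3.0):
    """ON-center model: narrow excitatory center minus broad inhibitory surround."""
    k = [[gaussian(x * x + y * y, sigma_center) - gaussian(x * x + y * y, sigma_surround)
          for x in COORDS] for y in COORDS]
    mean = sum(map(sum, k)) / SIZE**2
    # Shift to zero mean so a uniform field gives (numerically) no response.
    return [[v - mean for v in row] for row in k]

def gabor_kernel(theta, sigma=3.0, wavelength=8.0):
    """Gaussian-windowed cosine. theta is the direction the cosine varies
    along, so the preferred bar runs perpendicular to theta."""
    k = []
    for y in COORDS:
        row = []
        for x in COORDS:
            x_t = x * math.cos(theta) + y * math.sin(theta)
            y_t = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(x_t**2 + y_t**2) / (2 * sigma**2))
            row.append(envelope * math.cos(2 * math.pi * x_t / wavelength))
        k.append(row)
    return k

def response(kernel, image):
    """Linear response: weighted sum of the stimulus under the receptive field."""
    return sum(k * p for krow, prow in zip(kernel, image) for k, p in zip(krow, prow))

def stimulus(inside):
    """SIZE x SIZE image: 1.0 (light) where inside(x, y) is true, else 0.0 (dark)."""
    return [[1.0 if inside(x, y) else 0.0 for x in COORDS] for y in COORDS]

uniform = stimulus(lambda x, y: True)
light_spot = stimulus(lambda x, y: x * x + y * y <= 2)  # light spot, dark surround
dark_spot = stimulus(lambda x, y: x * x + y * y > 2)    # dark spot, light surround
vertical_bar = stimulus(lambda x, y: abs(x) <= 1)
horizontal_bar = stimulus(lambda x, y: abs(y) <= 1)

dog = dog_kernel()
gabor = gabor_kernel(theta=0.0)  # cosine varies along x, so it prefers vertical bars

print("center-surround:", response(dog, uniform), response(dog, light_spot), response(dog, dark_spot))
print("oriented:", response(gabor, vertical_bar), response(gabor, horizontal_bar))
```

With these settings, the center-surround unit ignores a uniform field, responds positively to a small light spot, and responds negatively to a dark spot on a light background. The oriented unit responds far more strongly to a vertical bar than to a horizontal one; rotating `theta` by 90 degrees flips that preference.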

In itself, this is fascinating to me. But what keeps its grip on me is the raw computational power involved. Light falling on the retina registers in V1 approximately 100 ms later. Conscious object recognition in the temporal lobes happens about 150 ms after that, or roughly 250 ms after the light hits the retina. And at least in the center of our gaze, we see in incredible detail.

My first run-in with this computational power came when I tried to simulate an artificial neural network to detect scene changes in television programming. I reduced the television input to what I thought was a modest resolution: 720 x 480. However, if you do the math, that is 345,600 pixels. In a neural network, units connect to other units, so 345,600 inputs connecting to a modest 100,000 units at the next layer (e.g., the thalamus) gives 34,560,000,000 connections. You can imagine my (admittedly naive) surprise that even the supercomputers used for nuclear simulations could not handle this in real time. Our best computers could not do what you and I do in real time every day.
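The connection count above can be pushed a little further in a short Python sketch. Beyond the numbers in the post, it assumes a fully connected layer, one multiply-accumulate (MAC) per connection per frame, 4-byte floating-point weights, and about 30 frames per second; these are illustrative assumptions, and the sketch covers only that first layer.

```python
# Back-of-the-envelope cost of one fully connected layer on 720 x 480 video.
# Assumptions (not from the post): every pixel connects to every hidden unit,
# one multiply-accumulate (MAC) per connection per frame, float32 weights,
# and ~30 frames/s.
WIDTH, HEIGHT = 720, 480
HIDDEN_UNITS = 100_000
FRAMES_PER_SECOND = 30  # rounded from NTSC's ~29.97
BYTES_PER_WEIGHT = 4    # float32

pixels = WIDTH * HEIGHT
connections = pixels * HIDDEN_UNITS
macs_per_second = connections * FRAMES_PER_SECOND
weight_bytes = connections * BYTES_PER_WEIGHT
# Weights this large cannot stay in a processor's cache, so processing
# each new frame means reading all of them from memory again.
weight_bytes_per_second = weight_bytes * FRAMES_PER_SECOND

print(f"pixels:          {pixels:,}")
print(f"connections:     {connections:,}")
print(f"MACs per second: {macs_per_second:,}")
print(f"weight storage:  {weight_bytes / 1e9:,.2f} GB")
print(f"weight traffic:  {weight_bytes_per_second / 1e12:,.2f} TB/s")
```

Under those assumptions, the first layer alone needs about 10^12 multiply-accumulates per second and about 138 GB of weights, which works out to roughly 4 TB of memory traffic every second if the weights are re-read for each frame.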

Setting up my lab, I had to buy a special $100 digital video cable to run the 16 feet from the computer to the 19-inch LCD monitor that experiment participants will watch. Regular cables could not handle the bandwidth; visual information is just too plentiful. And this morning, I came to this topic while watching my DVD authoring software take 4 hours to encode a 1-hour program. We have software that plays digital video in real time, but processing that content is another matter.
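To put rough numbers on "too plentiful," here is a Python sketch of uncompressed video bit rates. The post gives only the monitor size and the encoding time, so the display mode, frame rate, chroma format, and DVD bit-rate ceiling below are all assumptions; treat the results as orders of magnitude.

```python
# Raw (uncompressed) bit rates for the two situations in the post.
# Assumptions, not stated in the post: the 19-inch LCD runs at
# 1280 x 1024, 60 Hz, 24-bit color; DVD-resolution video is 720 x 480 at
# NTSC's 30000/1001 frames/s with 4:2:0 chroma (12 bits per pixel on
# average); and the DVD-Video maximum video bit rate is taken as 9.8 Mbit/s.

def raw_bitrate(width, height, bits_per_pixel, frames_per_second):
    """Bits per second for uncompressed video, counting visible pixels only
    (a real display link also carries blanking intervals)."""
    return width * height * bits_per_pixel * frames_per_second

monitor_bps = raw_bitrate(1280, 1024, 24, 60)
dvd_raw_bps = raw_bitrate(720, 480, 12, 30000 / 1001)
DVD_MAX_VIDEO_BPS = 9.8e6
compression_ratio = dvd_raw_bps / DVD_MAX_VIDEO_BPS

# Encoding a 1-hour program in 4 hours runs at a quarter of real time.
encode_speed = 1 / 4

print(f"monitor link:  {monitor_bps / 1e9:.2f} Gbit/s")
print(f"raw DVD video: {dvd_raw_bps / 1e6:.1f} Mbit/s")
print(f"compression needed at 9.8 Mbit/s: about {compression_ratio:.0f}x")
print(f"encoder speed: {encode_speed:.2f}x real time")
```

Under these assumptions, the monitor link carries close to 2 Gbit/s of pixel data, and the DVD encoder has to squeeze roughly 124 Mbit/s of raw video down by a factor of about 13, at a quarter of real-time speed.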

Yet we do it in real time, and it feels effortless. Millions of neurons fire across many, many millions of connections (i.e., synapses) to process the pixels on your monitor. We have found some interesting trade-offs that the human system makes to maintain this efficiency, but there is much more to learn. In the meantime, it will keep on fascinating me.

FOLLOW SAM ON TWITTER

SUBSCRIBE TO THIS FEED
